A friend called me last week, excited. He wants to put some money into “AI.” When I asked what specifically, he said, “You know, NVIDIA.” I told him there’s more to AI than NVIDIA and OpenAI (which is private).

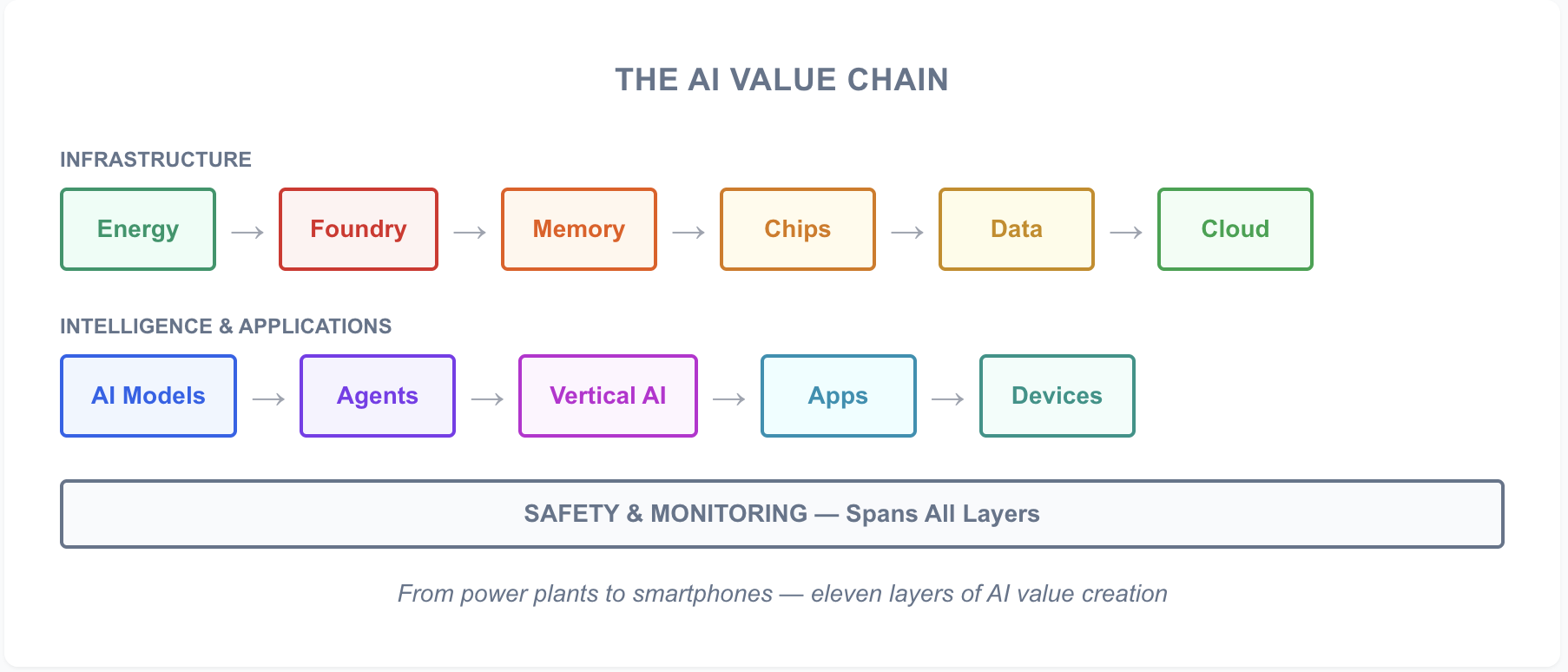

Everyone is asking the wrong question. “Should I invest in AI?” assumes AI is one thing. It’s not. It’s eleven different businesses stacked on top of each other—and the money flows very differently through each one.

The landscape has shifted dramatically in just a few years. When I graduated from NYU Stern’s M.S. in Analytics and AI in 2019, we were still relying on recurrent neural networks (RNNs) to predict the next word. At the time, traditional machine learning on structured data was the enterprise standard. Few could have predicted that Large Language Models (LLMs) would evolve so rapidly into the force they are today.

As an angel investor who has backed more than a dozen AI and software companies alongside firms like FirstMark Capital and Bain Capital Ventures, I’ve spent months mapping this landscape. This guide breaks down each layer: who’s winning, who’s challenging, and where the opportunities are.

The Eleven Layers

Think of AI like a building. NVIDIA makes the steel beams, but someone has to pour the foundation, wire the electricity, and design the rooms people actually live in. Each layer has different companies, different profit margins, and different risks.

What Each Layer Does

| Layer | What It Is |
|---|---|
| Energy & Power | The electricity that keeps AI running. Data centers consume as much power as small cities. Companies build power plants, cooling systems, and grid connections. |
| Chip Manufacturing | The factories that make AI chips. Most chip companies design chips but don’t make them; they send designs to manufacturers like TSMC. |
| Memory | The short-term storage that feeds data to chips. AI chips are fast, but useless without high-speed memory to keep up. |
| Processors | The chips that do the actual computing. This is NVIDIA’s territory: the GPUs that train and run AI models. |
| Data Platforms | The systems that store, organize, and prepare data for AI. Models are only as good as the data they learn from. |
| Cloud & Inference | Renting AI computing power over the internet. Inference is running trained models to get answers; every ChatGPT response is inference. |
| AI Models | The brains: software that can write, reason, and create. ChatGPT, Claude, and Gemini live here. |
| AI Agents | AI that takes action, not just answers questions. Instead of telling you how to book a flight, it books the flight for you. |
| Vertical AI | AI built for specific industries like healthcare, legal, or finance. Often outperforms general AI on specialized tasks. |
| Applications | Products people actually use: coding assistants, customer service bots, search tools, robots. |
| Devices | Hardware that runs AI locally: smartphones, smart glasses, robots. AI without needing the cloud. |
| Safety | Tools that prevent AI from misbehaving: blocking harmful content, detecting manipulation, ensuring compliance. |

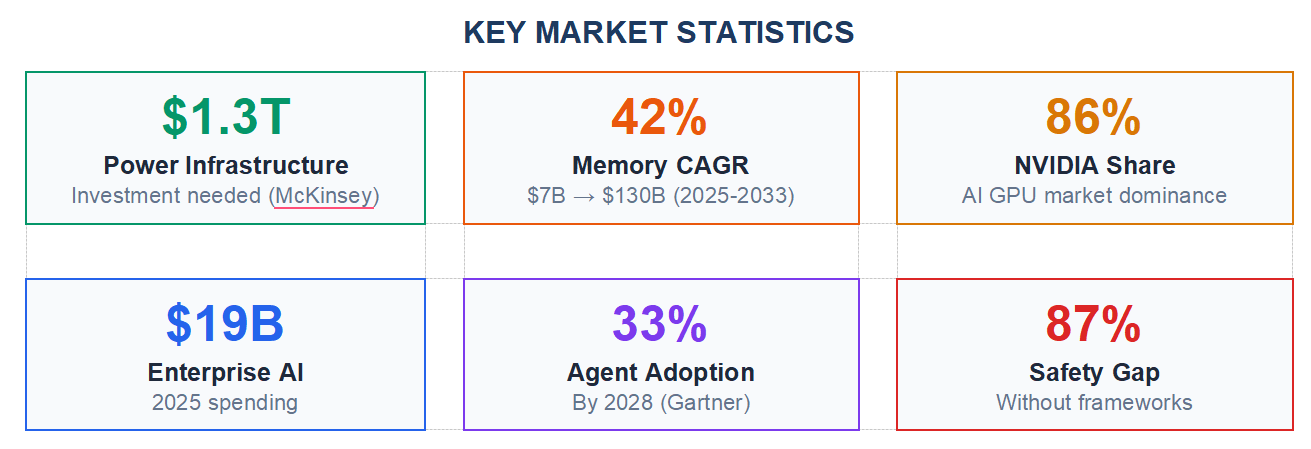

Key Market Stats

Let’s walk through each layer.

1. Energy & Power

Before AI can think, it needs electricity—lots of it. A single AI data center can consume as much power as a small city. And here’s the problem: in places like Virginia, getting connected to the power grid now takes up to seven years.

You could have unlimited money, the best engineers, and contracts for thousands of NVIDIA chips—but if you can’t plug in, none of it matters. Power has become the biggest bottleneck in AI.

McKinsey estimates $1.3 trillion will flow into power infrastructure for AI over the coming years. GE Vernova has emerged as a dominant player, locking up contracts to supply 80 gigawatts of power generation—enough to power tens of millions of homes. They’re sold out through 2028. Other companies are betting on nuclear (Oklo), geothermal (Fervo), and converting waste gas into computing power (Crusoe).

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| GE Vernova | Leader | 80 GW in contracts; partnerships with Amazon, Chevron | Sold out through 2028; essential infrastructure |
| Vertiv | Leader | Cooling systems; power distribution | Every data center needs cooling |
| Schneider Electric | Leader | Energy management software and hardware | Global scale; efficiency focus |
| Eaton | Challenger | Power management; backup systems | Diversified industrial player |
| Quanta Services | Challenger | Builds power grid infrastructure | Benefits from grid expansion |
| Oklo | Emerging | Small nuclear reactors; backed by Sam Altman | Long timeline but massive potential |
| Crusoe Energy | Emerging | Converts waste gas to AI computing power | Unique model; environmentally friendly |
| Fervo Energy | Emerging | Geothermal power; Google partnership | 24/7 clean energy |

2. Chip Manufacturing (Foundries)

Here’s something most people don’t realize: NVIDIA doesn’t make its own chips. Neither does AMD, Apple, or most other chip companies. They design them, then send the designs to a foundry—a company that actually manufactures the physical chips.

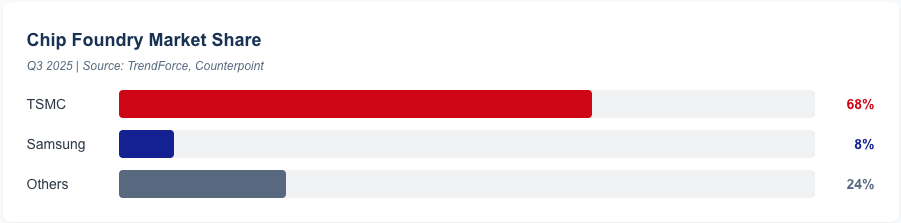

One company dominates this layer: TSMC (Taiwan Semiconductor Manufacturing Company). They control over 60% of the global market and over 90% of the most advanced chips. TSMC wins no matter which AI company succeeds—they make chips for NVIDIA, AMD, Apple, and almost everyone else.

CHIP FOUNDRY MARKET SHARE

The risk? TSMC is based in Taiwan, which creates geopolitical concerns. That’s why Intel is trying to build up American manufacturing with help from the U.S. government’s CHIPS Act.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| TSMC | Dominant Leader | 60%+ market share; makes chips for everyone | Wins regardless of which AI company succeeds |
| Samsung Foundry | Challenger | Investing heavily to catch up | Discount to TSMC; improving quality |
| Intel Foundry | Turnaround Play | $8B+ in U.S. government funding | American alternative; high execution risk |

3. Memory

This might be the most underappreciated layer in the entire stack. AI chips need special high-speed memory to function. Without it, even the fastest processor sits idle, waiting for data.

The market for this specialized memory is projected to grow from $7 billion in 2025 to $130 billion by 2033—a growth rate of 42% per year. Only three companies make it: SK hynix, Samsung, and Micron. This tight supply gives them significant pricing power.
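To sanity-check that growth figure, here is a minimal Python sketch of the compound annual growth rate (CAGR) calculation. The function name `cagr` is mine, and I am assuming eight annual compounding periods between 2025 and 2033. With exactly those endpoints the implied rate comes out nearer 44% than 42%, so the quoted figure presumably rests on a slightly different base year or endpoint. Either way, the order of magnitude is the same.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text, in billions of dollars: $7B (2025) to $130B (2033).
# Assumption: eight annual compounding steps between the two dates.
implied = cagr(7.0, 130.0, 2033 - 2025)
print(f"Implied CAGR: {implied:.1%}")

# The reverse check: where does $7B land after 8 years at the quoted 42%?
projected = 7.0 * (1 + 0.42) ** 8
print(f"$7B compounded at 42% for 8 years: ${projected:.0f}B")
```

The same helper works for any of the market-size projections in this guide, as long as you know the start value, end value, and number of years.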

SK hynix is NVIDIA’s preferred supplier and controls over half the market. If you believe AI will keep growing, memory is a quieter but potentially more stable bet than the chip makers themselves.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| SK hynix | Leader | NVIDIA’s preferred supplier; 50%+ market share | Technology lead; strong margins |
| Samsung | Challenger | Investing to catch up; massive scale | Improving yields; vertical integration |
| Micron | Challenger | Only U.S.-based player; diversified business | Domestic supply chain advantage |
| Astera Labs | Emerging | Makes connectivity chips for memory systems | Critical enabling technology |

4. Processors (The NVIDIA Layer)

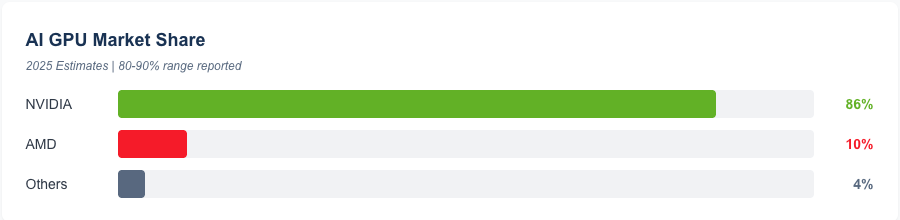

This is the layer everyone knows. NVIDIA controls 86% of the AI chip market. Their data center business generated $80 billion in revenue in just the first nine months of 2025. Profit margins exceed 75%—extraordinarily high for a hardware company.

But NVIDIA’s real advantage isn’t just the hardware—it’s CUDA, their software platform. Every AI researcher learned on CUDA. Every major AI framework is built for CUDA. Switching away would take years of engineering work. This software lock-in is what makes NVIDIA’s position so durable.

Challengers exist. AMD offers cheaper alternatives and has won business from Microsoft and Meta. Startups like Cerebras ($8.1B valuation), Groq ($6.9B), and Tenstorrent ($2.6B, led by legendary chip designer Jim Keller) are building alternative architectures. But unseating NVIDIA will be extremely difficult.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| NVIDIA | Dominant (86%) | Industry standard; CUDA software platform | Premium valuation but earnings to match |
| AMD | Challenger | Cheaper alternative; Microsoft/Meta wins | Gaining share; software gap closing |
| Intel | Turnaround | New AI chips; huge R&D budget | Deep discount; execution risk |
| Cerebras | Emerging | Unique wafer-scale chip design; $8.1B valuation | Novel approach; also offers inference cloud |
| Groq | Emerging | Fastest speeds; $6.9B valuation (acquired by NVIDIA for $20B) | Speed leader; specialized focus |
| Tenstorrent | Emerging | Open architecture; Jim Keller CEO; $2.6B | Backed by Samsung, LG, Jeff Bezos |

5. Data Platforms

AI models are only as good as the data they’re trained on. This layer includes companies that store, organize, label, and manage the massive datasets that feed AI systems.

Scale AI hit a $29 billion valuation after Meta invested $14.3 billion for a 49% stake in June 2025—but that deal created problems. OpenAI and Google are walking away, worried about their data flowing to a Meta-aligned company.
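The headline valuation follows directly from the deal terms. A minimal sketch, assuming the valuation is simply the investment divided by the stake acquired (the helper name `implied_valuation` is illustrative, and real deals carry terms this ignores):

```python
def implied_valuation(investment: float, stake: float) -> float:
    """Company valuation implied by paying `investment` for a fractional `stake`."""
    if not 0 < stake <= 1:
        raise ValueError("stake must be a fraction between 0 and 1")
    return investment / stake


# Figures from the text, in billions of dollars: $14.3B for a 49% stake.
valuation = implied_valuation(14.3, 0.49)
print(f"Implied valuation: ${valuation:.1f}B")
```

This lands close to the $29 billion figure, which is a quick way to check that a reported stake and a reported valuation are telling the same story.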

Databricks ($62 billion valuation after raising $10 billion) is building what could become the operating system for enterprise AI—handling everything from data storage to model deployment. Snowflake is racing to catch up with AI features on top of its massive existing customer base.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| Databricks | Leader | $62B valuation; end-to-end AI platform | Becoming the enterprise AI operating system |
| Snowflake | Leader | Huge enterprise customer base; adding AI | Distribution advantage; playing catch-up on AI |
| Scale AI | Leader | $29B valuation; Meta owns 49% | Customer conflicts from Meta deal |
| Pinecone | Challenger | Leading database for AI search | Critical infrastructure for AI apps |
| Weaviate | Emerging | Open-source database for AI | Developer favorite; rapid adoption |

6. Cloud & Inference

This layer is about running AI models at scale. Every time you ask ChatGPT a question, that’s inference—running a trained model to get an answer. Training builds the AI brain; inference is the brain thinking.

Amazon (AWS), Microsoft (Azure), and Google (GCP) have dominated cloud computing for years. But AI workloads are different—they need specialized infrastructure. A new breed of “AI-native” cloud providers is emerging, focused specifically on running AI models fast and cheap.

CoreWeave (NASDAQ: CRWV) went public in March 2025 at $40 per share, the first major AI infrastructure IPO of the year. They can spin up AI computing resources 35 times faster than traditional clouds. Microsoft signed a $10 billion deal with them. Cerebras has launched multiple inference data centers across North America and Europe that can deliver answers faster than anyone else.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| AWS/Azure/Google | Hyperscale Leaders | Global scale; compliance; bundled services | Two-thirds of market; AI driving growth |
| CoreWeave | AI Cloud Leader | Public (CRWV); 35x faster; Microsoft $10B deal | First major AI infra IPO of 2025 |
| Cerebras | Inference Leader | Multiple data centers; fastest inference speeds | Wafer-scale chips; unique architecture |
| Lambda Labs | Challenger | $1.5B raised; developer-friendly | Research community favorite |
| Groq | Challenger | Ultra-fast inference; $6.9B valuation | Speed optimized; growing cloud offering |
| Modal | Emerging | Easy-to-use serverless AI computing | Growing fast among developers |
| Together AI | Emerging | Hosts open-source AI models | Ecosystem play |

7. AI Models

This is ChatGPT territory—the large AI models that can write, reason, and create. Training these models costs hundreds of millions of dollars. OpenAI plans to spend over $200 billion through 2030.

The leaders are well-known: OpenAI (ChatGPT, $500B valuation as of October 2025, reportedly in talks to raise at $750B+), Anthropic (Claude, $183B valuation with Microsoft and NVIDIA investments pushing it higher), and Google (Gemini). Meta gives away its Llama models for free, betting that open-source will win.

The risk: A Chinese company called DeepSeek recently released models nearly as good as the leaders at a fraction of the cost. If AI models become commodities, value shifts to applications that use them—not the models themselves.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| OpenAI | Leader | ChatGPT; 200M+ users; Microsoft partnership | ~$500B valuation; talks at $750B+ |
| Anthropic | Leader | Claude; safety focus; Amazon/Google/Microsoft backed | $183B valuation; NVIDIA investing |
| Google | Leader | Gemini; integrated into Search and Cloud | Distribution through Search and Gmail |
| Meta | Challenger | Llama (free/open-source); 3.5B daily users | Betting open-source wins |
| xAI | Challenger | Grok; Elon Musk; integrated with X/Tesla | Access to real-time data; rapid scaling |
| Mistral | Challenger | European; efficient models; $6B valuation | EU regulatory advantage |
| DeepSeek | Emerging | Near-frontier quality at low cost; Chinese | Commoditization threat |

8. AI Agents

This is the layer I’m most excited about. We’re moving from AI that answers questions to AI that completes tasks autonomously. Instead of asking ChatGPT “how do I book a flight,” you tell an AI agent “book me the cheapest flight to Miami next Tuesday” and it actually does it.

Gartner predicts 33% of business software will have these capabilities by 2028, up from under 1% today.

LangChain has become the standard toolkit for building AI agents, used by companies like LinkedIn and Uber. CrewAI (used by 60% of Fortune 500 companies) focuses on teams of AI agents working together. Microsoft is building agent capabilities directly into Office 365.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| LangChain | Leader | Industry standard; LinkedIn/Uber use it | 80,000+ developers; strong ecosystem |
| CrewAI | Challenger | 60% of Fortune 500; $18M raised | Easiest to get started; enterprise traction |
| Microsoft | Challenger | Building into Office 365; Azure integration | Distribution through Windows/Office |
| Google | Emerging | Agent protocol with 50+ partners | Could become industry standard |
| Cognition (Devin) | Emerging | AI software engineer; $2B valuation | Can write code autonomously |

9. Vertical AI (Industry-Specific)

General-purpose AI models like ChatGPT are impressive, but specialized AI tools often work better for specific industries. A medical AI trained on millions of clinical notes outperforms ChatGPT on diagnosis suggestions by 35-50%.

Healthcare, legal, and finance are leading adopters because they can’t send sensitive data to OpenAI’s servers. They need AI that runs on their own systems.

Vertical AI companies raised $3.5 billion in 2025—triple the prior year. Harvey (legal) tripled its valuation to $8 billion in December 2025 after raising $760 million across three rounds this year alone. Abridge (medical documentation, $5.3B valuation) is leading in healthcare. The sector represents $1.5 billion of AI spending.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| Harvey | Leader (Legal) | $8B valuation; 50 of top AmLaw 100 firms | $760M raised in 2025; $100M+ ARR |
| Abridge | Leader (Healthcare) | $5.3B valuation; medical documentation | Epic integration; doctors love it |
| Hippocratic AI | Emerging (Healthcare) | $3.5B valuation; patient communication | Safety-focused; clinical validation |
| Tennr | Emerging (Healthcare) | $605M valuation; medical billing automation | Clear ROI for healthcare providers |

10. Applications

This is where AI meets everyday users. Enterprise spending on AI applications hit $19 billion in 2025. At least 10 AI products now generate over $1 billion in annual revenue.

Coding tools are the breakout category—$4 billion in spending. Cursor (AI-powered code editor) reached a $29.3 billion valuation after raising $2.3B in November 2025, with over $1 billion in annual revenue. GitHub Copilot is used by 40% of developers. Even Anthropic’s Claude Code hit $1 billion in run-rate revenue within months of launch.

Customer service is another hot area. Sierra (founded by former Salesforce co-CEO Bret Taylor) hit a $10 billion valuation on the pitch that its AI resolves customer issues better than human agents.

Watch robotics: Goldman Sachs projects 50,000-100,000 humanoid robots shipped in 2026. Figure AI ($2.6B valuation) has a BMW partnership. Costs are expected to fall from $35,000 to under $15,000 within a decade.
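To put that cost decline in annual terms, here is a rough sketch. It assumes a smooth, constant percentage decline over exactly ten years and a $15,000 endpoint, neither of which the projection itself specifies; `cost_path` is a hypothetical helper. Under those assumptions the price falls by about 8% a year.

```python
def cost_path(start_cost: float, end_cost: float, years: int) -> list[float]:
    """Year-by-year costs under a constant annual percentage decline."""
    if start_cost <= 0 or end_cost <= 0 or years <= 0:
        raise ValueError("costs and years must be positive")
    factor = (end_cost / start_cost) ** (1 / years)  # yearly price multiplier
    return [start_cost * factor ** year for year in range(years + 1)]


# Figures from the text: $35,000 falling to $15,000.
# Assumption: the decline takes exactly ten years at a steady rate.
path = cost_path(35_000, 15_000, 10)
annual_decline = 1 - path[1] / path[0]
print(f"Implied annual decline: {annual_decline:.1%}")
for year, cost in enumerate(path):
    print(f"Year {year:2d}: ${cost:,.0f}")
```

A faster decline early on, which is common when manufacturing scales up, would pull the midpoint prices lower than this steady path suggests.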

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| GitHub Copilot | Leader (Coding) | 40% developer adoption; Microsoft | $100M+ annual revenue |
| Cursor | Leader (Coding) | $29.3B valuation; $1B+ ARR; Nov 2025 | Fastest-growing AI coding tool |
| Sierra | Leader (Support) | $10B valuation; Bret Taylor founded | Better than human agents |
| Glean | Leader (Search) | AI search for companies; $150M raised | Microsoft alternative |
| Figure AI | Emerging (Robotics) | $2.6B valuation; BMW partnership | Humanoid robots; costs falling fast |

11. Devices

AI is moving from the cloud to your pocket. Every new smartphone has a dedicated AI chip. Meta’s Ray-Ban smart glasses sold over 2 million units in 2024—shipments up 210% from the prior year.

The big shift: AI that runs locally on your device, without sending data to the cloud. Apple calls this “Apple Intelligence.” Qualcomm’s latest chips can run AI models directly on your phone.

This matters for privacy (your data stays on your device) and speed (no waiting for cloud responses). The edge AI market is projected to reach $59 billion by 2030.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| Qualcomm | Leader | AI chips in most Android phones | Every smartphone becomes an AI device |
| Apple | Leader | Neural Engine; Apple Intelligence | Privacy focus; ecosystem integration |
| NVIDIA Jetson | Leader | AI chips for robots and industrial use | Dominates robotics/automotive |
| Meta Ray-Ban | Challenger | Smart glasses; 2M+ sold; AI assistant | Post-smartphone interface |
| Hailo | Emerging | Ultra-efficient AI chips for devices | Power efficiency leader |

12. Safety & Monitoring (Horizontal Layer)

This one is not a twelfth floor of the building. It is a horizontal layer that cuts across all eleven above. As AI gets more powerful, companies need tools to keep it from going off the rails: preventing harmful outputs, detecting when AI is being manipulated, and ensuring compliance with regulations.

According to Gartner, 87% of companies don’t have comprehensive AI safety frameworks. The EU’s AI Act is forcing companies to take this seriously.

NVIDIA’s NeMo Guardrails is becoming an industry standard. Startups like Lakera (prevents manipulation attacks) and Patronus AI (detects AI hallucinations) are growing fast.

Competitive Landscape

| Company | Position | Key Strengths | Investment Thesis |
|---|---|---|---|
| NVIDIA NeMo | Leader | Safety guardrails; prevents misuse | Becoming enterprise standard |
| Datadog | Challenger | AI monitoring built into existing product | Huge existing customer base |
| Lakera | Emerging | Prevents manipulation attacks | Security-first focus |
| Patronus AI | Emerging | Detects AI hallucinations | Critical for enterprise trust |

The Investment Framework

After mapping all eleven layers, here’s how I think about AI investing:

- Infrastructure first. TSMC, the memory companies, and power infrastructure have clearer business models than most AI application companies. They win regardless of which AI model succeeds. Energy is the new bottleneck—$1.3 trillion will flow there.

- Memory is the sleeper pick. Growing 42% annually with only three suppliers. SK hynix, Samsung, and Micron benefit from AI growth without the volatility of picking winners at the model layer.

- Vertical beats horizontal. Industry-specific AI (healthcare, legal, finance) shows higher accuracy, clearer return on investment, and stronger competitive moats than general-purpose tools. Harvey and Abridge are proving this.

- Watch the middleware. LangChain, CrewAI, and safety tools are becoming essential plumbing. They capture value regardless of which models dominate.

- Robotics is next. Physical AI—robots that can see, think, and act—is where software meets the real world. Costs are falling fast. High risk but massive potential.

The Bottom Line

The AI value chain isn’t one bet—it’s eleven. The winners will either control critical chokepoints (TSMC’s manufacturing, NVIDIA’s software, the memory oligopoly) or build deep integration into specific workflows that makes switching painful.

For investors, diversification across layers makes sense: infrastructure for stability, memory and energy for the new bottlenecks, AI-native clouds for growth, vertical applications for defensibility, and agents for the next wave.

My friend who wanted to invest in “AI” now has a framework. NVIDIA is one answer. But it’s not the only one—and depending on your risk tolerance and time horizon, it may not be the best one.

The question isn’t whether AI will transform the economy. It’s which of these eleven layers will capture the most value from that transformation.

• • •

Sources and References

Market Research: IDC, Gartner, McKinsey, Goldman Sachs, Bank of America, Bloomberg Intelligence, IEA

Industry Reports: Menlo Ventures State of Generative AI 2025, Bessemer State of the Cloud, a16z, Stanford HAI, SemiAnalysis, Deloitte

News: Financial Times, Bloomberg, The Information, TechCrunch, Wall Street Journal, CNBC, Reuters

Company Sources: SEC filings, earnings reports and press releases from TSMC, NVIDIA, SK hynix, Samsung, Micron, GE Vernova, Databricks, Anthropic, OpenAI, Harvey, Abridge, Cursor, Sierra, Figure AI, CoreWeave, Cerebras, CrewAI, and others cited

Disclaimer: This analysis is for informational purposes only and does not constitute investment advice. Market data reflects publicly available sources as of December 2025. Valuations change rapidly. Past performance is not indicative of future results.